by Martin Brinkmann on December 21, 2018 in Companies

It appears that the fears of privacy and data protection advocates with regard to voice-powered devices have come true, at least in a single case in which Amazon leaked one customer’s voice data to another customer.

What happened? According to German computer magazine CT (PDF), one of Amazon’s German customers requested access to the data the company had stored about him. Amazon sent the customer a zip archive with the data, and the customer began to analyze it.

He noticed that the archive included about 1700 WAV files and a PDF document that contained Alexa transcripts. The customer did not own or use Alexa devices and concluded quickly, after playing some audio files, that the recordings were not his.

The customer contacted Amazon about the incident, but nothing came of it, so he contacted CT and provided the magazine with a sample of the files. The audio recordings revealed a great deal about the then-unknown Amazon customer, including where and how Alexa was used, as well as information about jobs, people, alarms, likes, home application controls, and transport inquiries.

CT created a profile of the user from the recordings and used it to identify the customer, his girlfriend, and some friends. CT contacted the customer, and he confirmed that his voice was on the recordings.

Amazon told the magazine that the leak “was an unfortunate mishap that was the result of human error”. Amazon contacted both customers after CT contacted the company.

Privacy issue

Amazon stores Alexa voice data indefinitely in the cloud. The company does so to “improve its services”. The data may be used to identify owners of Alexa devices, as well as others who are mentioned in recordings or audible while recordings take place. While it depends on how Alexa devices are used, it is clear that the recordings contain private information that most, if not all, customers would be very uncomfortable seeing leaked to others.

Most owners of voice-controlled devices are probably unaware of, or indifferent to, the fact that their data is stored in the cloud indefinitely.

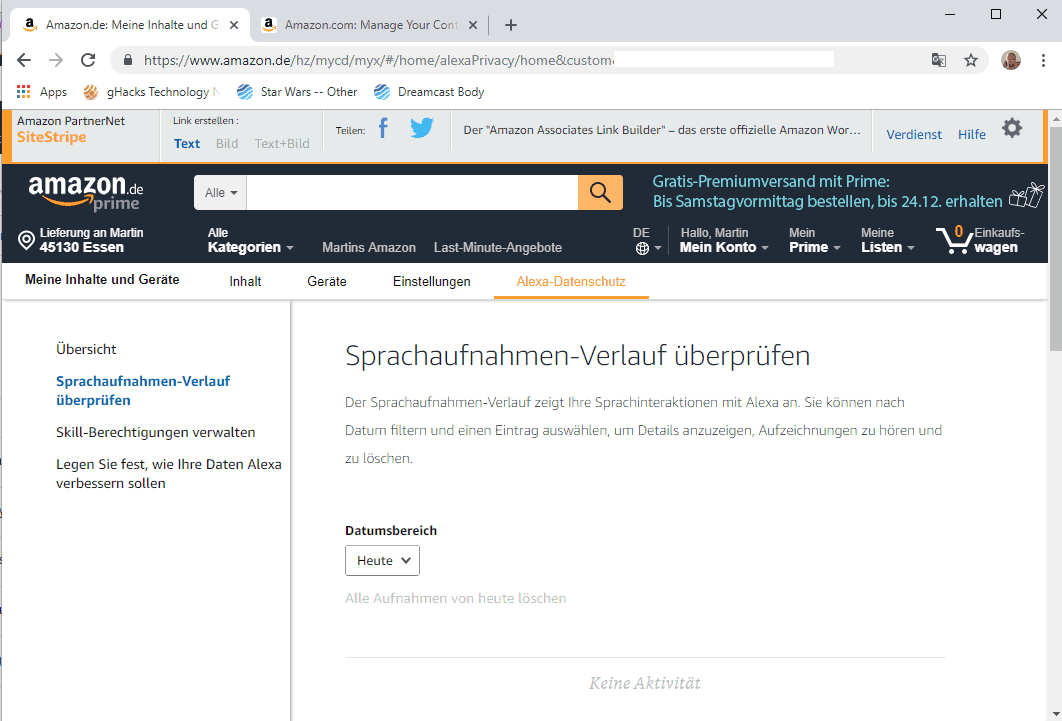

Amazon customers may delete voice recordings that Amazon has stored in the cloud at https://amazon.de/alexaprivacy/. I was not able to access the functionality on the main Amazon website, https://amazon.com/alexaprivacy/, as it redirected the request automatically.

The German page is accessible and provides options to delete recordings that Amazon has on file. There is no option, however, to block Amazon from storing recordings in the first place. It is unclear if the page works only for German customers or all Amazon customers.

Closing Words

Companies need to take human error into account when it comes to privacy leaks and violations. The Amazon case demonstrates that leaks may happen for numerous reasons, including successful hacking attempts, software errors, and human error.

Now You: Do you use voice-controlled devices?